TSEA81 - Datorteknik och realtidssystem

Note that these pages are only available in English.

TSEA81 - Computer Engineering and Real-time Systems

Lecture - 1 - Introduction

This lecture note gives an introduction to the course. An introduction to real-time systems is presented, and important real-time systems concepts, e.g. parallel activities, tasks, and mutual exclusion, are introduced.

Famous and infamous real-time systems

Introduction to the course

The course TSEA81 - Computer Engineering and Real-time Systems is given during the second part of the fall semester. Information regarding the structure and contents of the course is available via the course site.

The aim of the course is stated in the course syllabus:

- To develop an understanding of hardware/software interactions in computer systems with parallel activities and time constraints, and to develop basic skills for integration of software using a real-time operating system.

- give examples of hardware/software interactions for handling of parallel activities and time constraints

- explain properties of software with parallel activities

- describe the structure, and give examples from the implementation, of a real-time kernel

- summarize how the underlying computer architecture and instruction set influences the implementation of a real-time operating system

- design and implement software with parallel activities and time constraints

- use a real-time operating system

- exhibit basic skills in integration of software and a real-time operating system for a specific computer architecture

The course schedule contains additional information regarding the course contents, including the times for lectures, assignments, and labs, as well as the deadlines for the assignments.

The course book is given in the course syllabus as Realtidsprogrammering, from Studentlitteratur. At this time it is sold only as an e-book. It is reportedly also available as an e-book from the city library.

The book Realtidsprogrammering will be referred to using the notation [RT]. The course schedule contains reading references, per lecture, in the form of references to book chapters and book sections in [RT].

Introduction to real-time systems

An introduction to real-time systems can be given e.g. as answers to the following questions:

- What type of systems are real-time systems?

- Where and when are real-time systems used?

- Why is it of interest to learn about real-time systems?

- Real-time systems are computer systems with special requirements regarding response times.

Response time requirements can be formulated in different ways, and with different kinds of precision. Some example systems, where response time requirements occur, are

- A DVD-player which shall start its playback after a button has been pressed, but also control the laser pickup so that the pickup follows the tracks of the DVD disc. These two tasks exhibit different kinds of response time requirements. There may e.g. be hard requirements regarding the timing aspects for the laser pickup control, e.g. that the pickup must be controlled periodically with a specified number of control actions per second, and more relaxed requirements regarding the response time for the start button, e.g. that the playback shall start between a half second and one second after the button is pressed.

- A cellular phone which shall perform a user defined action when a button is pressed, while at the same time process incoming and outgoing LTE traffic, 3G traffic and/or GSM traffic. Also in this system, different kinds of timing requirements may be specified, with respect to the processing of incoming signals and with respect to the response time when a button is pressed.

- An aircraft, with equipment for control of different subsystems, e.g. engines, ailerons, or landing gear. The control of these systems is often specified using strict requirements regarding response times e.g. that the landing gear must be down and locked within a specified time period after a command for this action has been issued. There may also be computer based control of e.g. speed and altitude, where timing requirements regarding control actions are strictly specified.

- An ATM machine, with timing requirements regarding the delivery of money, e.g. that the response time for delivery of money, after the user has inserted the security code, shall be 15 seconds plus minus a tolerance of 5 seconds.

- An operating system in a computer, with requirements on the response time for a user command, e.g. for starting a program, opening a window, or initiating a print job.

It can also be seen, from the above list, that a real-time system is required to perform more than one task at the same time. Tasks of this type will be referred to as parallel activities.

Real-time systems are often classified into hard real-time systems and soft real-time systems. A hard real-time system is a system where the fulfilment of response time requirements is mandatory for the system to be considered functional. A soft real-time system is a system where the timing requirements are more relaxed, and where the fulfilment of these requirements is not directly coupled to the overall functionality of the system.

A computer program implementing a clock is an example of a real-time system. A program which implements a simple clock is described in Chapter 2 in [RT]. The example describes a small real-time program, and demonstrates how parallel activities can be used when implementing the program.

A real-time computer program needs functionality for time handling, and for creation and execution of parallel activities. A real-time operating system can be used for this purpose. A real-time operating system is an operating system designed to meet requirements of real-time systems. A minimum requirement of a real-time operating system can be stated as: it shall support predictable response times to external events, and it shall support the use of tasks. Real-time operating systems are further described in Section Real-time operating systems (RTOS).

Computer programs with parallel activities can be implemented without using a real-time operating system. It is possible to use an ordinary operating system, such as Windows or Linux, where parallel activities can be implemented as different programs, or implemented as activities within one program. Compared to a real-time operating system, however, an ordinary operating system offers less support for strict requirements regarding response times, since a real-time operating system is better adapted to this type of requirement.

A program which fulfils requirements regarding response times can sometimes be implemented without using an operating system. This is common in small embedded systems, where e.g. a periodic interrupt can be used for activation of interrupt handlers, and where this activation is done so that the stated requirements regarding response times are fulfilled. Systems of this type are further described in Section Foreground Background scheduling.

Support for parallel activities can be built into a programming language. This is the case e.g. for Java and for the Ada programming language. Parallel activities are then specified directly in the programming language, and there is no need for explicit calls to an operating system. This functionality is instead incorporated in the implementation of the language, e.g. the virtual machine of Java, which then handles the interface to an underlying operating system.

Considering the questions stated above, i.e.

- What type of systems are real-time systems?

- Where and when are real-time systems used?

- Why is it of interest to learn about real-time systems?

As an answer to the third question, one could say e.g. that knowledge in the area of real-time systems can be useful in most situations where a computer is used in an embedded system, i.e. where the computer is an integral part of a technical system. Systems of this type are found e.g. in consumer electronics, medical electronics, chemical industries, manufacturing industries, airplanes, and cars.

Knowledge and skills in the area of real-time systems can also be advantageous for concurrent programming in general, e.g. during development of application programs with parallel activities, such as programs where user communication and access to other resources shall be performed in parallel. Examples of this type of program include Internet browsers performing simultaneous user interaction and Internet communication, word processing programs performing simultaneous user interaction and grammar checking, and calculation-intensive programs, where e.g. scientific computations are done in parallel with user actions, such as setting parameters for forthcoming computations.

Knowledge of real-time programming can also provide general insight into the functionality of different kinds of software with parallel activities. This can be advantageous e.g. when trying to understand the inner workings of an operating system or a thread-based application, e.g. during specification, documentation, or test of software.

Interrupts, processor registers, stacks

When a processor is interrupted, the flow of execution is temporarily changed. An interrupt handler starts its execution, and can be used for taking appropriate actions, relevant for the type of interrupt that occurred.

Interrupts can be used for the purpose of reacting to external events, e.g. when buttons are pressed, when incoming data are present on a communication channel, or when a DMA transfer has been completed.

Interrupts can also be used for keeping track of time. If a periodic interrupt is used, this can serve as a time base in a real-time system. Such an interrupt makes it possible to perform activities periodically, e.g. executing a control algorithm every tenth interrupt, executing a sensor measurement algorithm every second interrupt, and executing a data storage activity every 100th interrupt.
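The periodic activation described above can be sketched in C as follows. This is a minimal illustration, not code from the course: the handler name timer_tick_handler, the three activity functions, and the counters are hypothetical, and the interrupts are simulated by calling the handler directly.

```c
#include <assert.h>

/* Hypothetical counters, used only to make the activations observable. */
static unsigned long tick_count = 0;
static unsigned long sensor_runs = 0;
static unsigned long control_runs = 0;
static unsigned long storage_runs = 0;

/* Hypothetical placeholder activities. */
static void read_sensors(void)          { sensor_runs++; }
static void run_control_algorithm(void) { control_runs++; }
static void store_data(void)            { storage_runs++; }

/* Assumed to be called once for every periodic interrupt. */
void timer_tick_handler(void)
{
    tick_count++;
    if (tick_count % 2 == 0)
        read_sensors();            /* every second interrupt */
    if (tick_count % 10 == 0)
        run_control_algorithm();   /* every tenth interrupt */
    if (tick_count % 100 == 0)
        store_data();              /* every 100th interrupt */
}

/* Simulates n periodic interrupts by calling the handler directly. */
void simulate_ticks(unsigned long n)
{
    for (unsigned long i = 0; i < n; i++)
        timer_tick_handler();
}
```

Calling simulate_ticks(100) activates the sensor measurement 50 times, the control algorithm 10 times, and the data storage once. On real hardware the handler would instead be attached to a timer interrupt, and the activities would need to be short enough to finish before the next interrupt.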

When an interrupt interrupts the ongoing flow of instructions, it must be ensured that the execution can continue in a correct way after the interrupt handler has finished its execution. For this purpose, it is necessary to save data that may be altered by an interrupt handler. It is also necessary to save data needed for the continuation of the execution after the interrupt.

The current state of an ongoing execution is captured in the values stored in the processor registers. By saving these registers, before the interrupt handler starts, it is possible to restore them again when the interrupt handler has finished its execution.

The data needed for continued execution after the interrupt are represented by the address of the first instruction to be executed after the interrupt. By saving this address when the interrupt occurs, and by restoring the saved value into the program counter after the interrupt handler has finished, the execution can continue as if nothing happened, i.e. there is no direct effect of the interrupt on the execution flow of the program. Of course, the interrupt handler may have modified data used by the program, which may affect the execution flow after a while.

When storing data temporarily during an interrupt, a stack can be used. A stack is a LIFO (Last-in-first-out) list, typically located in the computer memory, and often addressed using a special register, often called the stack pointer register.
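The use of a stack for saving and restoring processor state can be illustrated with a small simulation in C. The sketch makes several assumptions that are not taken from the text: the stack grows towards lower addresses, the processor has only two general registers and a program counter, and the names cpu_regs_t, interrupt_entry, and interrupt_exit are hypothetical. On a real processor, this work is done by hardware and by assembly code at the start and end of the interrupt handler.

```c
#include <assert.h>
#include <stdint.h>

#define STACK_WORDS 16

/* Simulated stack memory and stack pointer. The stack is assumed to grow
   towards lower addresses, so an empty stack points just past the end. */
static uint32_t stack_mem[STACK_WORDS];
static uint32_t *sp = stack_mem + STACK_WORDS;

void push(uint32_t value)
{
    assert(sp > stack_mem);                /* stack overflow check */
    *--sp = value;
}

uint32_t pop(void)
{
    assert(sp < stack_mem + STACK_WORDS);  /* stack underflow check */
    return *sp++;
}

/* Hypothetical, very small register set. */
typedef struct {
    uint32_t r0;
    uint32_t r1;
    uint32_t pc;
} cpu_regs_t;

/* Saves the return address and the registers when an interrupt occurs. */
void interrupt_entry(const cpu_regs_t *regs)
{
    push(regs->pc);
    push(regs->r0);
    push(regs->r1);
}

/* Restores the saved values in reverse order, since the stack is LIFO. */
void interrupt_exit(cpu_regs_t *regs)
{
    regs->r1 = pop();
    regs->r0 = pop();
    regs->pc = pop();
}
```

Because the last value pushed is the first value popped, interrupt_exit must restore the registers in the opposite order to the one used in interrupt_entry.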

In a real-time system, interrupts are used for keeping track of time. Hence, interrupts are important for the timely execution of tasks in a real-time system.

Real-time systems and concurrency

Parallel activities are described in Section 2.1 in [RT], where two important aspects of parallel activities are described:

- Mutual exclusion, which means that a shared resource, in a program with parallel activities, may not be accessed by more than one parallel activity at a time. Examples of shared resources are data that are shared between different parallel activities, and shared hardware units, e.g. a printer, a keyboard, or a graphical display.

- Event handling, which means that certain events in a program with parallel activities must be handled in a controlled manner. A typical example is a program where parallel activities communicate using a shared buffer, and where a parallel activity writing data to the buffer shall wait when the buffer is full, and a parallel activity reading data from the buffer shall wait when the buffer is empty.
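The shared buffer mentioned above can be sketched in C using pthreads. The sketch combines both aspects: a mutex provides mutual exclusion, and waiting on a full or empty buffer is handled with condition variables. The use of condition variables is a choice made for this illustration, and the names buffer_t, buffer_put, buffer_get, and run_producer_consumer are hypothetical.

```c
#include <assert.h>
#include <pthread.h>

#define BUFFER_SIZE 4

typedef struct {
    int data[BUFFER_SIZE];
    int count;                 /* number of stored elements */
    int in;                    /* index of the next write */
    int out;                   /* index of the next read */
    pthread_mutex_t mutex;     /* protects all fields above */
    pthread_cond_t not_full;   /* signalled when space becomes available */
    pthread_cond_t not_empty;  /* signalled when data becomes available */
} buffer_t;

void buffer_init(buffer_t *b)
{
    b->count = b->in = b->out = 0;
    pthread_mutex_init(&b->mutex, NULL);
    pthread_cond_init(&b->not_full, NULL);
    pthread_cond_init(&b->not_empty, NULL);
}

/* The writer waits while the buffer is full. */
void buffer_put(buffer_t *b, int value)
{
    pthread_mutex_lock(&b->mutex);
    while (b->count == BUFFER_SIZE)
        pthread_cond_wait(&b->not_full, &b->mutex);
    b->data[b->in] = value;
    b->in = (b->in + 1) % BUFFER_SIZE;
    b->count++;
    pthread_cond_signal(&b->not_empty);
    pthread_mutex_unlock(&b->mutex);
}

/* The reader waits while the buffer is empty. */
int buffer_get(buffer_t *b)
{
    int value;
    pthread_mutex_lock(&b->mutex);
    while (b->count == 0)
        pthread_cond_wait(&b->not_empty, &b->mutex);
    value = b->data[b->out];
    b->out = (b->out + 1) % BUFFER_SIZE;
    b->count--;
    pthread_cond_signal(&b->not_full);
    pthread_mutex_unlock(&b->mutex);
    return value;
}

/* Writer activity: puts the numbers 1..100 into the buffer. */
static void *producer(void *arg)
{
    buffer_t *b = arg;
    for (int i = 1; i <= 100; i++)
        buffer_put(b, i);
    return NULL;
}

/* Starts the writer, reads 100 values, and returns their sum. */
long run_producer_consumer(void)
{
    buffer_t b;
    pthread_t writer;
    long sum = 0;

    buffer_init(&b);
    pthread_create(&writer, NULL, producer, &b);
    for (int i = 0; i < 100; i++)
        sum += buffer_get(&b);
    pthread_join(writer, NULL);
    return sum;
}
```

Since the buffer holds only four elements, the writer is forced to wait repeatedly while the reader catches up, yet every value is delivered exactly once and the returned sum is 5050.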

Parallel activities will mainly be referred to as tasks. Parallel activities are also denoted e.g. processes or threads. The word process is commonly used to denote a parallel activity in an operating system, e.g. Windows or Linux. A process of this kind can be a running program, but it can also be a parallel activity which belongs to the operating system, e.g. an activity for communication with external units, or an activity for user communication. The operating system allows several programs to execute concurrently, i.e. as parallel activities. A word processing program and an Internet browser can e.g. execute concurrently, on one computer.

The word thread is often used to denote an activity which executes within a process in an operating system. An Internet browser may e.g. use different threads, which execute concurrently, where one thread handles user input while at the same time another thread handles the external communication, e.g. for sending and receiving data over the Internet.

Many problem formulations related to parallel activities are applicable both for processes and for threads. Examples are handling of shared resources and handling of events. For this reason, the word task is used in this course to denote a parallel activity in general, and specifically to denote a parallel activity in the Simple_OS real-time operating system.

Foreground Background scheduling

As described in Section Interrupts, processor registers, stacks, interrupts are used for the purpose of reacting to external events. This means that in a system with interrupts, at a given time instant, either an interrupt handler is executing, or if not, some other software is executing. Consider a software structure with one (endless) loop, here referred to as the main-loop, in combination with one or more interrupt handlers. In this system, the main-loop and the interrupt handlers share the CPU, and they execute concurrently, i.e. their executions are interleaved. From the perspective of an outside observer, this means that the computer is doing several things - it performs the computations in the main-loop and it serves the interrupts. And if the switching between these activities is performed sufficiently often, the activities can be regarded as parallel activities.

A system with one main-loop and one or more interrupt handlers is referred to as a foreground/background system. The actual execution in such a system can be described as a form of scheduling, where the scheduling policy is to run the main-loop continuously, while at the same time serving the interrupts using the interrupt handlers. The term foreground/background scheduling is therefore used to describe the system's execution.

In a foreground/background system, the main-loop and the interrupt handlers can be regarded as parallel activities. However, they are parallel activities of a different kind. One activity is a main-loop, and the other activities are interrupt handlers. If instead a real-time operating system is used, parallel activities of the same kind can be supported.
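The structure of a foreground/background system can be sketched in C as follows. This is a simplified illustration under stated assumptions: the names sample_interrupt_handler and main_loop_iteration are hypothetical, the interrupt is simulated by an ordinary function call, and one pass of the main-loop is written as a function so that it can be called repeatedly.

```c
#include <assert.h>
#include <signal.h>

/* Variables shared between the interrupt handler and the main-loop. */
static volatile sig_atomic_t data_ready = 0;
static volatile sig_atomic_t latest_sample = 0;

/* Used only by the main-loop. */
static int processed_total = 0;

/* Hypothetical interrupt handler: kept short, it only stores the new
   sample and sets a flag for the main-loop. */
void sample_interrupt_handler(int sample)
{
    latest_sample = sample;
    data_ready = 1;
}

/* One pass through the body of the endless main-loop. */
void main_loop_iteration(void)
{
    if (data_ready) {
        data_ready = 0;
        processed_total += latest_sample;
    }
    /* other, less time-critical work would be done here */
}
```

In a complete program, main would consist of an endless loop calling main_loop_iteration, while the handler would be invoked by the hardware. Note that the flag and the sample are shared between the two activities. If an interrupt arrives between the test of data_ready and the read of latest_sample, a sample can be lost or processed twice, so a real system would typically disable interrupts briefly around these lines.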

Real-time operating systems (RTOS)

A real-time operating system is an operating system designed for real-time requirements. A real-time operating system can handle parallel activities. These activities are often referred to as tasks. In some real-time operating systems, e.g. OSE, the parallel activities are denoted processes.

Some examples of real-time operating systems are:

- The real-time operating system OSE from Enea.

- Real-time operating systems from Green Hills Software, e.g. INTEGRITY.

- The real-time operating system VxWorks from Wind River.

- The real-time operating system uC/OS-III from Micrium.

For additional information about real-time operating systems, see e.g. the Wikipedia article List of real-time operating systems.

Tasks

Tasks are described in Section 2.3 in [RT], where the following definition is presented:

- A task is defined as a section of program code which can start its execution, temporarily suspend its execution, and then resume its execution at a later time instant, all in a controlled manner.

When a task switch occurs, data associated with the task to be suspended are saved, e.g. the current value of the program counter and the current values of the processor registers. When this is done, data associated with the task to be resumed are restored, e.g. the value of the program counter and the values of the processor registers that were saved when this task was suspended at an earlier time instant.
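The save and restore steps of a task switch can be illustrated with a simulation in C. The sketch is not taken from [RT] or from any particular kernel: the processor is represented by a struct with a program counter and four registers, and the names context_t, task_t, and task_switch are hypothetical. For simplicity, the saved values are kept in a struct per task. A real kernel performs these steps in assembly code.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_REGS 4

/* The data saved and restored at a task switch. */
typedef struct {
    uint32_t pc;              /* program counter */
    uint32_t regs[NUM_REGS];  /* processor registers */
} context_t;

/* Hypothetical task representation holding the saved context. */
typedef struct {
    context_t saved;
} task_t;

/* Simulated processor state. */
static context_t cpu;

/* Saves the state of the task to be suspended, then restores the
   state of the task to be resumed. */
void task_switch(task_t *suspend, task_t *resume)
{
    suspend->saved = cpu;
    cpu = resume->saved;
}
```

After a call to task_switch, the simulated processor continues from the program counter value that the resumed task had when it was last suspended.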

The saved values of the program counter and the processor registers can be regarded as data which are associated with a task. These data are often stored on a stack, which is a data area belonging to the task. Other data, also associated with a task, are often stored in a special data structure, here denoted Task Control Block and abbreviated as TCB.

The priority of a task can be stored in the TCB of the task. The priority is used during a task switch, to determine the task to be resumed. This can be done by selecting the task with the highest priority, among the tasks which are ready for execution.
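A TCB and the priority-based selection can be sketched in C as follows. The field names, the type names, and the convention that a larger number means a higher priority are assumptions made for this illustration. Actual real-time kernels differ in all of these respects.

```c
#include <assert.h>
#include <stddef.h>

typedef enum { TASK_BLOCKED, TASK_READY, TASK_EXECUTING } task_state_t;

/* Hypothetical Task Control Block. */
typedef struct {
    int id;
    int priority;         /* assumption: larger value = higher priority */
    task_state_t state;
    void *stack_pointer;  /* refers to the saved registers on the task's stack */
} tcb_t;

/* Returns the ready task with the highest priority,
   or NULL if no task is ready for execution. */
tcb_t *select_next_task(tcb_t *tasks, size_t n)
{
    tcb_t *best = NULL;
    for (size_t i = 0; i < n; i++) {
        if (tasks[i].state != TASK_READY)
            continue;
        if (best == NULL || tasks[i].priority > best->priority)
            best = &tasks[i];
    }
    return best;
}
```

A blocked task is never selected, even if it has the highest priority of all tasks. The linear search is chosen for clarity. Kernels often keep the ready tasks in a sorted list or a bitmap to make the selection faster.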

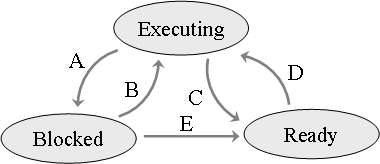

A task can be in different states. An example showing such states, and possible transfers between the states, is given in Figure 1.

Figure 1. A visualization of three task states: Blocked, Executing, and Ready.

The state transfers in Figure 1 are commented as follows:

- A means that a task leaves the state executing and enters the state blocked. This can e.g. happen when a task wants to use a shared resource, and the resource is used by another task, and therefore not available. The task is then placed in the state blocked, and must wait until the resource is available again, at some later time.

- B means that a task leaves the state blocked and enters the state executing. This can happen e.g. when a shared resource, for which the task is waiting, becomes available, and the task is allowed to execute immediately when this happens.

- C means that a task leaves the state executing and enters the state ready. This can happen if another task, with higher priority than the currently executing task, becomes ready for execution and is allowed to execute directly. This may occur when a shared resource, for which the task with higher priority is waiting, becomes available. The task with higher priority then becomes ready for execution, i.e. it leaves the state blocked and enters the state ready, and since it has higher priority than the currently executing task, a task switch is initiated. This task switch makes the running task leave the state executing and enter the state ready, while the task with higher priority is transferred from the state ready to the state executing.

- D means that a task leaves the state ready and enters the state executing. A situation where this can occur is described in the previous item. It can also happen when the currently executing task is being blocked, e.g. due to a shared resource not being available, and there is another task which is ready for execution. This task is then transferred from the state ready to the state executing, and the currently running task is transferred from the state executing to the state blocked.

- E means that a task leaves the state blocked and enters the state ready. This can happen when a shared resource, for which a task is waiting, becomes available, but the task waiting for the resource is not allowed to execute immediately when this occurs, e.g. due to the task having lower priority than the currently executing task.
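The state transfers A to E can be summarized as a small table in C. The sketch below is only an illustration of the list above, with hypothetical type and function names. It checks that a transfer is applied to a task in the correct source state, and rejects it otherwise.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { BLOCKED, READY, EXECUTING } state_t;

/* The transfers, labelled as in Figure 1. */
typedef enum {
    TRANSFER_A, TRANSFER_B, TRANSFER_C, TRANSFER_D, TRANSFER_E
} transfer_t;

/* Applies a transfer to a task state. Returns false, and leaves the
   state unchanged, if the task is not in the required source state. */
bool apply_transfer(state_t *state, transfer_t t)
{
    static const struct { state_t from, to; } table[] = {
        [TRANSFER_A] = { EXECUTING, BLOCKED   },
        [TRANSFER_B] = { BLOCKED,   EXECUTING },
        [TRANSFER_C] = { EXECUTING, READY     },
        [TRANSFER_D] = { READY,     EXECUTING },
        [TRANSFER_E] = { BLOCKED,   READY     },
    };

    if (*state != table[t].from)
        return false;
    *state = table[t].to;
    return true;
}
```

Note that there is no transfer from ready to blocked: a task must be executing in order to request a resource and thereby become blocked.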

Assignment 1 - Introduction, Shared Resources

The first assignment, 1 - Introduction, Shared Resources, gives an introduction to real-time programming using pthreads.

The first assignment also gives an introduction to the concept of mutual exclusion.

Mutual Exclusion

Mutual exclusion means that only one task at a time is allowed access to a shared resource. This is a common requirement when handling data that are shared between different tasks, and it ensures that two tasks are not allowed to read or write data in an uncontrolled manner. It is also important to require mutual exclusion when the shared resource consists of hardware, e.g. a serial port or a graphical display.

In some situations, more than one task may be allowed to simultaneously access a shared resource. This is the case e.g. when more than one task needs to read data from a file, which then can be allowed as long as no task is allowed to write to the file while the reading is in progress.
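One way to allow several simultaneous readers while excluding writers is a read-write lock, which pthreads provides as pthread_rwlock_t. The following is a minimal sketch, assuming for simplicity that the shared resource is an integer variable rather than a file. The names shared_value, read_shared, and write_shared are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L

#include <assert.h>
#include <pthread.h>

static pthread_rwlock_t rwlock = PTHREAD_RWLOCK_INITIALIZER;
static int shared_value = 0;

/* Several tasks may hold the read lock at the same time. */
int read_shared(void)
{
    int value;
    pthread_rwlock_rdlock(&rwlock);
    value = shared_value;
    pthread_rwlock_unlock(&rwlock);
    return value;
}

/* The write lock is exclusive: it waits until no task is reading
   or writing, and keeps other tasks out while held. */
void write_shared(int value)
{
    pthread_rwlock_wrlock(&rwlock);
    shared_value = value;
    pthread_rwlock_unlock(&rwlock);
}
```

A plain mutex would also be correct here, but it would force readers to wait for each other even though simultaneous reading is harmless.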

Mutual exclusion is illustrated in Assignment 1 - Introduction, Shared Resources, where a mutex is used for implementing mutual exclusion in C using pthreads.

Mutual exclusion is also illustrated in Chapter 3 in [RT]. Further information regarding mutual exclusion can be found in Section 5.1.1 in [RT].

Mutexes

A shared resource can be protected using a mutex. A pthreads mutex, named Mutex, can be declared as

/* a mutex, to protect the common variables */

pthread_mutex_t Mutex;

A mutex must be initialized before it is used, by doing the following:

/* initialise mutex */

pthread_mutex_init(&Mutex, NULL);

The second parameter can be used to specify various attributes for the mutex. However, in this course the default attributes are sufficient and NULL can be passed as the second parameter.

A critical region is a segment of code where a shared resource is used. When using a mutex for protecting a shared resource, critical regions shall start with a lock-operation on the mutex protecting the shared resource. In pthreads, this is done as

/* reserve shared resource */

pthread_mutex_lock(&Mutex);

When using a mutex for protecting a shared resource, critical regions shall finish with an unlock-operation on the mutex protecting the shared resource. In pthreads, this is done as

/* release shared resource */

pthread_mutex_unlock(&Mutex);
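The declaration, initialization, lock, and unlock operations above can be combined into a small example. This is a sketch and not the code of Assignment 1: the shared variable Counter, the function names, and the number of increments are assumptions made for the illustration.

```c
#include <assert.h>
#include <pthread.h>

#define INCREMENTS 100000

/* a mutex, to protect the common variables */
static pthread_mutex_t Mutex;

/* the common variable (hypothetical) */
static long Counter = 0;

/* Task body: increments the shared counter inside a critical region. */
static void *increment_task(void *arg)
{
    (void)arg;
    for (int i = 0; i < INCREMENTS; i++) {
        /* reserve shared resource */
        pthread_mutex_lock(&Mutex);
        Counter++;
        /* release shared resource */
        pthread_mutex_unlock(&Mutex);
    }
    return NULL;
}

/* Runs two tasks in parallel and returns the final counter value. */
long run_two_tasks(void)
{
    pthread_t t1, t2;

    Counter = 0;
    /* initialise mutex */
    pthread_mutex_init(&Mutex, NULL);
    pthread_create(&t1, NULL, increment_task, NULL);
    pthread_create(&t2, NULL, increment_task, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    pthread_mutex_destroy(&Mutex);
    return Counter;
}
```

With the mutex in place, run_two_tasks returns 200000. Without the lock and unlock operations, the two tasks could read and write Counter in an uncontrolled manner, and increments could be lost. On Linux, such a program is typically compiled with the -pthread option.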

If you are interested in more information about the various pthread library calls, you can read their documentation by running the man command in a Linux terminal (e.g. man pthread_mutex_lock).

Page manager: Kent Palmkvist

Last updated: 2017-10-27

LiU Homepage