We are six students who study Applied Physics and Electrical Engineering, and Computer Engineering, at Linköping University.

The process of finding one’s own motion is known as ego-motion estimation. In this project, a system was implemented that estimates the ego-motion of a Ladybug 3 camera and the vehicle it is mounted on.

Structure from motion is a fairly well-developed concept. It has successfully transitioned from a scientific endeavour into engineering, although research is ongoing. The literature is vast and varied, so this study is necessarily limited to a small fraction of the available work. Tardif et al. created a system for determining the motion of a vehicle from the video of a Point Grey Ladybug 2 camera, combining several standard methods with some novel and critical design choices. Their results are very impressive, and so their system forms the foundation of this project.

These are some examples of what the video output from the Ladybug camera looks like.

A Ladybug 3 omnidirectional camera, producing 6 x 2 MP video at 16 fps, provides the input signal to this system. The video frames from the camera are recorded into a proprietary Point Grey stream file. To make the data usable, the stream is converted offline to a large set of JPEG images by a simple tool running on Windows, which makes straightforward use of an application library provided by the manufacturer.

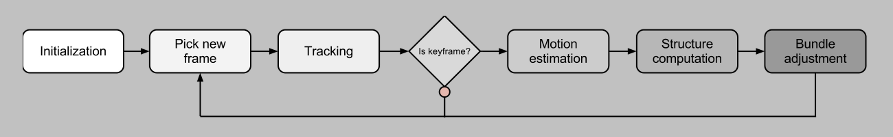

Tracking is the first step in the main ego-motion algorithm. First, feature points are detected in the current frame and their descriptors are computed. These feature points are matched with the feature points in the previous keyframe, and the essential matrix is estimated from these matches. The new essential matrix is then used to refine the matches: correspondences that do not fulfill the epipolar constraint are dropped. The last step is to decide whether the frame is a keyframe. A frame qualifies as a keyframe if at least 95% of the feature points have moved at least a set threshold distance since the previous keyframe. If the frame fails this test, it is dropped and the tracking algorithm is repeated for the next frame. If the frame is accepted as a keyframe, the landmarks are updated and the next step in the algorithm is performed.
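The match refinement and the keyframe test can be sketched in a few lines of Python with NumPy. This is only an illustration, not the project's code: the function names, the tolerance `tol`, the use of the algebraic residual, and the representation of observations as unit bearing vectors are all assumptions made for the example.

```python
import numpy as np

def epipolar_inliers(E, b_prev, b_curr, tol=1e-3):
    """Mask of matches that satisfy the epipolar constraint b_curr^T E b_prev = 0.

    b_prev, b_curr: (N, 3) arrays of unit bearing vectors (assumed representation).
    tol: hypothetical threshold on the algebraic residual.
    """
    residual = np.abs(np.einsum("ni,ij,nj->n", b_curr, E, b_prev))
    return residual < tol

def is_keyframe(pts_prev, pts_curr, min_disp, fraction=0.95):
    """True if at least `fraction` of the matched points moved at least `min_disp`.

    pts_prev, pts_curr: (N, 2) image positions of the same features in the
    previous keyframe and in the current frame.
    """
    disp = np.linalg.norm(pts_curr - pts_prev, axis=1)
    return bool(np.mean(disp >= min_disp) >= fraction)
```

In a pipeline like the one described, the mask from `epipolar_inliers` would be applied to the match list before `is_keyframe` is evaluated on the surviving correspondences.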

For pose estimation, the Perspective-n-Point (PnP) algorithm is used. The problem can be thought of as finding the pose that minimizes the reprojection error of all the known 3D points. This is a nonlinear least-squares problem that can be solved by different optimization methods, one of the most popular being Levenberg-Marquardt. The third-party library levmar is used as the solver in the PnP optimization.
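To make the optimization concrete, here is a minimal sketch of PnP as a Levenberg-Marquardt problem in Python with NumPy. It is not the project's implementation, which uses levmar. The example assumes a single pinhole camera with normalized image coordinates, a pose parameterized as a rotation vector plus translation, and a forward-difference Jacobian; all function names are hypothetical.

```python
import numpy as np

def rodrigues(w):
    """Rotation matrix from a rotation vector (axis times angle)."""
    theta = np.linalg.norm(w)
    K = np.array([[0, -w[2], w[1]], [w[2], 0, -w[0]], [-w[1], w[0], 0]])
    if theta < 1e-12:
        return np.eye(3) + K  # first-order approximation near zero
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1 - np.cos(theta)) / theta**2 * K @ K)

def residuals(pose, X, x_obs):
    """Stacked reprojection errors for pose = (rx, ry, rz, tx, ty, tz)."""
    Xc = X @ rodrigues(pose[:3]).T + pose[3:]
    return (Xc[:, :2] / Xc[:, 2:3] - x_obs).ravel()

def numeric_jacobian(f, p, eps=1e-6):
    """Forward-difference Jacobian of f at p."""
    r0 = f(p)
    J = np.empty((r0.size, p.size))
    for k in range(p.size):
        dp = np.zeros_like(p)
        dp[k] = eps
        J[:, k] = (f(p + dp) - r0) / eps
    return J

def solve_pnp_lm(X, x_obs, pose0, iters=50, lam=1e-3):
    """Refine a pose by damped Gauss-Newton (Levenberg-Marquardt) steps."""
    f = lambda p: residuals(p, X, x_obs)
    pose = pose0.astype(float)
    cost = np.sum(f(pose) ** 2)
    for _ in range(iters):
        r, J = f(pose), numeric_jacobian(f, pose)
        H = J.T @ J
        damping = lam * (np.diag(np.diag(H)) + 1e-12 * np.eye(6))
        step = np.linalg.solve(H + damping, -J.T @ r)
        new_cost = np.sum(f(pose + step) ** 2)
        if new_cost < cost:   # accept the step and trust the model more
            pose, cost, lam = pose + step, new_cost, lam * 0.5
        else:                 # reject the step and increase the damping
            lam *= 10.0
    return pose
```

The damping factor `lam` interpolates between Gauss-Newton (small `lam`) and gradient descent (large `lam`), which is the property that makes Levenberg-Marquardt robust to a rough initial pose.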

The structure computation consists of the triangulation of the landmarks’ 2D points, followed by several checks to ensure that the triangulated 3D points are good. The triangulation uses all observed projections of a 3D point and calculates the 3D point that minimizes the reprojection error over all frames in which it has been observed, so-called optimal triangulation.
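As an illustration of multi-view triangulation, the sketch below uses the linear (DLT) method, which minimizes an algebraic error and is commonly used as the starting point for a reprojection-error minimization. It is a swapped-in, simpler technique than the optimal triangulation described above, and the pinhole projection matrices, the function names, and the reprojection check are assumptions made for the example.

```python
import numpy as np

def triangulate_dlt(Ps, xs):
    """Linear triangulation of one 3D point from all of its observations.

    Ps: list of 3x4 projection matrices, one per frame observing the point.
    xs: list of (u, v) observations in normalized image coordinates.
    """
    rows = []
    for P, (u, v) in zip(Ps, xs):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    # The homogeneous solution is the right singular vector
    # belonging to the smallest singular value.
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    Xh = Vt[-1]
    return Xh[:3] / Xh[3]

def reprojection_errors(Ps, xs, X):
    """Reprojection error of X in every frame; usable as a quality check."""
    Xh = np.append(X, 1.0)
    errs = []
    for P, x in zip(Ps, xs):
        p = P @ Xh
        errs.append(np.linalg.norm(p[:2] / p[2] - np.asarray(x)))
    return np.array(errs)
```

A point could, for example, be rejected when its largest reprojection error exceeds a chosen threshold; the threshold itself would have to be tuned for the camera at hand.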

Bundle adjustment is used to reduce the accumulated error in the estimated poses and triangulated points. For bundle adjustment, the nonlinear least-squares minimizer Ceres Solver is used. To make the bundle adjustment computations faster, only a window of the last n keyframes is used. Since only the last n keyframes are considered in bundle adjustment, the solution can be inconsistent (up to a transformation) with earlier points and poses. To remedy this problem, an additional constraint is used that penalizes changes to the parameters of the first two poses in the window. This locks the solution so that it keeps the scale, rotation and translation of the poses and, by extension, the points that are no longer considered in bundle adjustment.
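The structure of such a windowed cost can be sketched as a residual vector: reprojection errors for every observation in the window, followed by a penalty on any change to the first two poses. Penalizing the first pose pins down rotation and translation, and the second pose then pins down the scale. The sketch below is not the project's Ceres code. The pinhole model, the rotation-vector pose parameterization, the function names, and the penalty `weight` are assumptions for illustration.

```python
import numpy as np

def rodrigues(w):
    """Rotation matrix from a rotation vector (axis times angle)."""
    theta = np.linalg.norm(w)
    K = np.array([[0, -w[2], w[1]], [w[2], 0, -w[0]], [-w[1], w[0], 0]])
    if theta < 1e-12:
        return np.eye(3) + K
    return (np.eye(3) + np.sin(theta) / theta * K
            + (1 - np.cos(theta)) / theta**2 * K @ K)

def window_residuals(poses, points, observations, anchor_poses, weight=1e3):
    """Residual vector for bundle adjustment over a window of keyframes.

    poses: (n, 6) array, one (rx, ry, rz, tx, ty, tz) row per keyframe in the window.
    points: (m, 3) array of 3D points seen in the window.
    observations: list of (pose_index, point_index, u, v) tuples.
    anchor_poses: (2, 6) values of the first two poses before optimization.
    weight: hypothetical strength of the penalty on the first two poses.
    """
    res = []
    for i, j, u, v in observations:
        Xc = rodrigues(poses[i, :3]) @ points[j] + poses[i, 3:]
        res.extend([Xc[0] / Xc[2] - u, Xc[1] / Xc[2] - v])
    # Penalize any drift of the first two poses away from their anchored values.
    res.extend(weight * (poses[:2] - anchor_poses).ravel())
    return np.array(res)
```

A nonlinear least-squares solver would minimize the squared norm of this vector over `poses` and `points`; with a large `weight`, the first two poses stay effectively fixed while the rest of the window is free to move.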