Vision Pipeline

The main component of the software system is the vision pipeline. The pipeline is highly configurable: algorithm parameters can be tuned and the execution order of the algorithms can be changed simply by editing the configuration file.

Overview of the image processing pipeline with the arrows representing data flow between modules.

Configuration file system

To make system settings easy to tune and change, the system reads them from a configuration file. Most relevant parameters can be specified in this file, so changing them does not require recompiling the system. Examples of settings that can be managed from the configuration file include:

- Target web server

- Sensor types

- What algorithms to run and in what order

- Image processing parameters
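The following is a minimal sketch of how a configuration file can control both which algorithms run, their order, and their parameters. The JSON format, the key names (`web_server`, `sensors`, `pipeline`, `params`) and the toy stages `scale` and `clip` are all hypothetical stand-ins chosen for illustration; the project's actual file format is not described here.

```python
import json

# Hypothetical configuration: key names and the JSON format are assumptions.
# Only "pipeline" and "params" are used by the code below.
CONFIG_TEXT = """
{
  "web_server": "http://localhost:8080/occupancy",
  "sensors": [{"type": "kinect", "name": "entrance_1"}],
  "pipeline": ["scale", "clip"],
  "params": {"scale": {"factor": 2.0}, "clip": {"max_value": 10}}
}
"""

# Registry mapping stage names used in the config to functions.
STAGES = {}


def stage(name):
    """Decorator that registers a processing stage under a config name."""
    def register(fn):
        STAGES[name] = fn
        return fn
    return register


@stage("scale")
def scale(data, factor=1.0):
    # Toy stand-in for a real image processing step.
    return [x * factor for x in data]


@stage("clip")
def clip(data, max_value=255):
    # Toy stand-in for a real thresholding step.
    return [min(x, max_value) for x in data]


def build_pipeline(config):
    """Resolve the configured stages, in order, together with their parameters."""
    params = config.get("params", {})
    steps = [(STAGES[name], params.get(name, {})) for name in config["pipeline"]]

    def run(data):
        for fn, kwargs in steps:
            data = fn(data, **kwargs)
        return data

    return run


config = json.loads(CONFIG_TEXT)
print(build_pipeline(config)([1, 4, 6]))  # runs "scale", then "clip"
```

Swapping the order of the entries in `pipeline` changes the execution order without touching the code, which is the property the configuration file system is meant to provide.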

I/O

The I/O module manages all system input and output, i.e. sampling the sensors and posting output data to the web server specified in the configuration file. Currently, all OpenCV-compatible cameras are supported, as well as the Microsoft Kinect depth sensor. The module is designed to be as modular as possible, so that new sensor types can be integrated into the system with relatively little effort. It also supports running several sensors in parallel, which enables the system to monitor the occupancy of a room with more than one entrance.
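One way to get this kind of modularity is a common sensor interface plus a registry that maps the sensor type named in the configuration to a class. The sketch below assumes such a design; `Sensor`, `ConstantSensor`, `SENSOR_TYPES` and `sample_all` are hypothetical names, and `ConstantSensor` merely returns a fixed frame so the example runs without hardware. A real camera backend could wrap OpenCV's `cv2.VideoCapture` and return the frame from its `read()` call.

```python
from abc import ABC, abstractmethod
from concurrent.futures import ThreadPoolExecutor


class Sensor(ABC):
    """Hypothetical common interface that every sensor backend implements."""

    name: str

    @abstractmethod
    def read(self):
        """Return the latest frame, or None if no frame is available."""


class ConstantSensor(Sensor):
    """Stand-in backend that always returns the same frame (for testing)."""

    def __init__(self, name, frame):
        self.name = name
        self._frame = frame

    def read(self):
        return self._frame


# Maps the sensor "type" string from the configuration to a backend class.
# New sensor types are added by registering one more class here.
SENSOR_TYPES = {"constant": ConstantSensor}


def sample_all(sensors):
    """Sample all sensors concurrently, e.g. one sensor per room entrance."""
    with ThreadPoolExecutor(max_workers=max(1, len(sensors))) as pool:
        frames = pool.map(lambda s: s.read(), sensors)
        return {s.name: frame for s, frame in zip(sensors, frames)}


sensors = [SENSOR_TYPES["constant"]("entrance_1", "frame-a"),
           SENSOR_TYPES["constant"]("entrance_2", "frame-b")]
print(sample_all(sensors))
```

Threads are used here because sensor reads are typically I/O-bound; whether the original system uses threads, processes or a polling loop is not stated.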

Human Segmentation

The human segmentation is based on two assumptions: that human heads are distinguishable modes in the depth image, and that people moving very close to each other seldom differ in height by much more than the height of a head. The latter assumption seems to hold more often than one might expect; it is supported by more than three hours of test data. The segmentation is realized by a series of thresholding steps, morphological operations, smoothing, contour finding and masking, and searches for local maxima. Some of the intermediate steps are illustrated in the figure below.

An example where the latter assumption is used to find multiple occluded humans is shown below.
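To make the thresholding and local-maximum search concrete, here is a minimal NumPy sketch. It assumes an overhead depth sensor mounted at a known height, so that heads become local maxima of the height map; the function name `find_head_candidates` and the default values of `sensor_height_mm`, `min_height_mm` and `radius` are hypothetical. The morphological, smoothing and contour steps of the real pipeline are omitted.

```python
import numpy as np


def find_head_candidates(depth_mm, sensor_height_mm=2800.0,
                         min_height_mm=1000.0, radius=2):
    """Return (row, col) pixels that are local height maxima above a threshold.

    Assumes an overhead depth sensor mounted sensor_height_mm above the floor,
    so the height of a point is sensor_height_mm - depth. All parameter
    values are illustrative.
    """
    height = sensor_height_mm - depth_mm.astype(np.float64)
    height[depth_mm == 0] = 0.0              # zero depth = invalid reading
    height[height < min_height_mm] = 0.0     # threshold: drop floor and low objects

    # Local-maximum search: keep a pixel if no neighbour inside a square
    # window of half-width `radius` is higher.
    h, w = height.shape
    padded = np.pad(height, radius, mode="constant", constant_values=-np.inf)
    is_max = height > 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dy == 0 and dx == 0:
                continue
            neighbour = padded[radius + dy:radius + dy + h,
                               radius + dx:radius + dx + w]
            is_max &= height >= neighbour
    rows, cols = np.nonzero(is_max)
    return list(zip(rows.tolist(), cols.tolist()))


# Synthetic scene: two people whose heads differ in height by 100 mm.
depth = np.full((12, 22), 2800.0)
depth[3:8, 3:8] = 1300.0     # shoulders of person 1
depth[5, 5] = 1000.0         # head of person 1
depth[3:8, 13:18] = 1300.0   # shoulders of person 2
depth[5, 15] = 1100.0        # head of person 2
print(find_head_candidates(depth))
```

Because the comparison uses `>=`, a perfectly flat head region yields several adjacent candidates; smoothing the height map first, as the pipeline description suggests, is one way to reduce this.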

Tracking

The tracking algorithm performs six steps in each frame iteration. The tracker maintains two lists: the potential objects detected in the current frame by the earlier image processing steps, and the objects currently being tracked. It pairs each object from the previous frame with the closest object in the current frame. The tracker also handles occlusion, outliers and noise.

The image below shows one object (green box) that has existed long enough to be considered a person, two (red boxes) that have just been found, and one (blue box) representing a person who has just been lost.
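The closest-pair matching and the object states above can be sketched as a greedy nearest-neighbour tracker. This is a hypothetical simplification, not the project's six-step tracker: `NearestNeighbourTracker`, `Track` and the parameters `max_dist`, `min_age` and `max_missed` are assumptions. A distance gate stands in for outlier rejection, and keeping unmatched tracks for a few frames stands in for occlusion handling.

```python
import math
from dataclasses import dataclass
from itertools import count


@dataclass
class Track:
    track_id: int
    x: float
    y: float
    age: int = 1      # number of frames in which the object has been seen
    missed: int = 0   # consecutive frames without a matching detection


class NearestNeighbourTracker:
    """Greedy closest-pair tracker (hypothetical sketch)."""

    def __init__(self, max_dist=50.0, min_age=5, max_missed=3):
        self.max_dist = max_dist      # pairs farther apart are never matched
        self.min_age = min_age        # frames before an object counts as a person
        self.max_missed = max_missed  # frames a lost object is kept (short occlusions)
        self.tracks = []
        self._ids = count()

    def update(self, detections):
        """Match detections [(x, y), ...] from the current frame to existing tracks."""
        pairs = sorted(
            (math.dist((t.x, t.y), d), ti, di)
            for ti, t in enumerate(self.tracks)
            for di, d in enumerate(detections)
        )
        used_tracks, used_dets = set(), set()
        for dist, ti, di in pairs:
            if dist > self.max_dist:
                break  # remaining pairs are even farther apart
            if ti in used_tracks or di in used_dets:
                continue
            track = self.tracks[ti]
            track.x, track.y = detections[di]
            track.age += 1
            track.missed = 0
            used_tracks.add(ti)
            used_dets.add(di)
        for ti, track in enumerate(self.tracks):
            if ti not in used_tracks:
                track.missed += 1
        # Drop objects that have been lost for too long.
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        # Unmatched detections start new, not yet confirmed tracks.
        for di, (x, y) in enumerate(detections):
            if di not in used_dets:
                self.tracks.append(Track(next(self._ids), x, y))
        return self.tracks

    def confirmed(self):
        """Tracks that have existed long enough and are currently visible."""
        return [t for t in self.tracks if t.age >= self.min_age and t.missed == 0]
```

In terms of the figure, confirmed tracks would correspond to green boxes, tracks with `age < min_age` to red boxes, and tracks with `missed > 0` to blue boxes.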

People Entry/Exit Counting

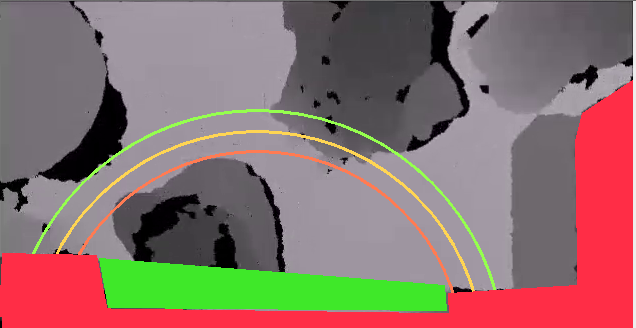

To be registered as entering or exiting, a human object has to fulfill certain requirements. To be counted as having entered, an object must first be found in a door area, must then pass all three circular checkpoint lines, and must have existed for at least a set minimum number of frames. To be counted as having exited, an object must have existed for at least the set minimum number of frames, must have passed the three lines at least once, and must disappear inside a door area.

In the image below, the door area is marked in green and the checkpoint lines are the circular lines.
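The entry and exit rules can be expressed as a check on a track's position history. This sketch assumes that the door area is a disc around a door centre and that the three checkpoint lines are concentric circles around the same centre; the functions `crossed_radii` and `classify_track` and all geometric parameters are hypothetical.

```python
import math


def crossed_radii(positions, center, radii):
    """Return the set of checkpoint radii whose circle the path crosses at least once."""
    dists = [math.dist(p, center) for p in positions]
    crossed = set()
    for a, b in zip(dists, dists[1:]):
        for r in radii:
            if min(a, b) < r <= max(a, b):
                crossed.add(r)
    return crossed


def classify_track(positions, disappeared, center, door_radius, radii, min_frames):
    """Return "entered", "exited" or None for one track's (x, y) history."""
    if len(positions) < min_frames:
        return None  # too short-lived to be counted
    if crossed_radii(positions, center, radii) != set(radii):
        return None  # has not passed all checkpoint lines

    def in_door(p):
        return math.dist(p, center) <= door_radius

    if in_door(positions[0]) and not in_door(positions[-1]):
        return "entered"  # appeared in the door area and moved into the room
    if disappeared and in_door(positions[-1]):
        return "exited"   # vanished inside the door area
    return None


door = (0.0, 0.0)
checkpoints = (20.0, 40.0, 60.0)
path = [(0, 5), (0, 25), (0, 45), (0, 65)]
print(classify_track(path, False, door, 10.0, checkpoints, min_frames=3))
print(classify_track(path[::-1], True, door, 10.0, checkpoints, min_frames=3))
```

Requiring all three circles to be crossed, rather than just one, makes the count robust to noisy tracks that briefly flicker across a single line near the door.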

Queue Detection

To determine which pairs of persons are in a queue together, we need a measure of “queue closeness” that takes both the persons’ positions and velocities into account. If two persons are indeed in the same queue, they should either both be standing still relatively close to each other, or be moving slowly along roughly the same path. We estimate this path by fitting a cubic Bézier curve to the pair and use the length of the curve as their “queue closeness”: the shorter the curve, the closer the pair. Such a curve is completely determined by its two endpoints, the velocities at the endpoints, and a scale factor related to the parametrization of the curve. In practice, this scale factor sets the relative impact of the velocities on the curve length. The velocities themselves are estimated using a simple Kalman filter.
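A cubic Bézier curve is B(t) = (1-t)^3 P0 + 3(1-t)^2 t P1 + 3(1-t) t^2 P2 + t^3 P3 for t in [0, 1]. The sketch below assumes one common way to build it from positions and velocities (a Hermite-style construction): P0 and P3 are the two positions, P1 = P0 + s·v_a and P2 = P3 - s·v_b, where s is the scale factor. The exact parametrization used in the project is not given, so `queue_closeness`, `scale` and `samples` are hypothetical; the velocities are taken as inputs (in the system they come from the Kalman filter), and the arc length is approximated by a polyline.

```python
import math


def bezier_point(p0, p1, p2, p3, t):
    """Evaluate a 2-D cubic Bézier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    return tuple(u**3 * a + 3 * u**2 * t * b + 3 * u * t**2 * c + t**3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def queue_closeness(pos_a, vel_a, pos_b, vel_b, scale=1.0, samples=100):
    """Approximate length of the Bézier curve from person a to person b.

    Control points (an assumption): P1 = pos_a + scale*vel_a and
    P2 = pos_b - scale*vel_b, so the curve leaves a along a's velocity and
    arrives at b along b's velocity.
    """
    p1 = (pos_a[0] + scale * vel_a[0], pos_a[1] + scale * vel_a[1])
    p2 = (pos_b[0] - scale * vel_b[0], pos_b[1] - scale * vel_b[1])
    points = [bezier_point(pos_a, p1, p2, pos_b, i / samples)
              for i in range(samples + 1)]
    # Polyline approximation of the arc length.
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Standing still: the curve degenerates to the straight line between them.
print(queue_closeness((0, 0), (0, 0), (3, 4), (0, 0)))
# Walking away from each other: the curve overshoots and becomes longer.
print(queue_closeness((0, 0), (-1, 0), (10, 0), (-1, 0), scale=5.0))
```

For two people walking one after the other along the line that joins them, the curve stays straight and its length equals their distance; velocities that disagree with that path bend the curve and lengthen it, which is what makes the length usable as a closeness measure.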

Video frames in which at least one pair of persons is connected by a sufficiently short curve are assumed to contain a visible queue.
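The per-frame decision is a pairwise threshold test. In this hypothetical sketch, `closeness` is any directed pair measure (such as a Bézier curve length) passed in as a function, and the `threshold` value is a tuning parameter; since a curve from a to b need not have the same length as one from b to a, both orderings are evaluated and the shorter is kept, which is an assumption rather than something the description specifies.

```python
from itertools import combinations


def queue_pairs(people, closeness, threshold):
    """Return index pairs (i, j) whose queue closeness is below threshold."""
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(people), 2):
        if min(closeness(a, b), closeness(b, a)) < threshold:
            pairs.append((i, j))
    return pairs


def frame_has_queue(people, closeness, threshold):
    """A frame is assumed to contain a visible queue if any pair qualifies."""
    return bool(queue_pairs(people, closeness, threshold))


# Stand-in closeness measure (Manhattan distance) for illustration only.
def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


people = [(0, 0), (1, 0), (50, 50)]
print(queue_pairs(people, manhattan, threshold=2.0))
```

The number of pairs grows quadratically with the number of people, which is unproblematic for the handful of people typically visible in one frame.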

Queue Severity Estimation

The severity of the queue situation in the observed room is estimated by considering the number of frames with detected queues within a time window and, optionally, the number of people in the room.
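A sliding window over recent frames is one straightforward way to implement this. In the sketch below, `QueueSeverityEstimator`, `window_frames` and `capacity` are hypothetical, and the way occupancy is combined with the queue fraction (multiplying by the occupancy ratio, capped at 1) is an assumed formula chosen for illustration, not the one used in the project.

```python
from collections import deque


class QueueSeverityEstimator:
    """Fraction of recent frames with a detected queue, optionally weighted by occupancy."""

    def __init__(self, window_frames=300, capacity=None):
        self.window = deque(maxlen=window_frames)  # oldest frames drop out automatically
        self.capacity = capacity                   # hypothetical room capacity

    def update(self, queue_detected, people_in_room=None):
        """Add one frame's queue decision and return the current severity in [0, 1]."""
        self.window.append(bool(queue_detected))
        severity = sum(self.window) / len(self.window)
        if self.capacity and people_in_room is not None:
            # Assumed weighting: scale by occupancy ratio, capped at 1.
            severity *= min(1.0, people_in_room / self.capacity)
        return severity


estimator = QueueSeverityEstimator(window_frames=4)
for detected in [True, True, False, False]:
    severity = estimator.update(detected)
print(severity)
```

Using a fixed-length window makes the estimate react to changes within a bounded delay while smoothing out single frames in which the queue detection flickers.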